diff --git a/.github/workflows/main.yml b/.github/workflows/main.yml

index 7d1c7d01c..6cecc9fea 100644

--- a/.github/workflows/main.yml

+++ b/.github/workflows/main.yml

@@ -46,11 +46,11 @@ jobs:

- name: pylint check

run: |

- python -m pylint src/gstools/

+ python -m pylint src/gstools_cython/

- name: cython-lint check

run: |

- cython-lint src/gstools/

+ cython-lint src/gstools_cython/

build_wheels:

name: wheels for ${{ matrix.os }}

@@ -76,7 +76,7 @@ jobs:

path: ./dist/*.whl

build_sdist:

- name: sdist on ${{ matrix.os }} with py ${{ matrix.ver.py }} numpy${{ matrix.ver.np }} scipy${{ matrix.ver.sp }}

+ name: sdist on ${{ matrix.os }} with py ${{ matrix.ver.py }} numpy${{ matrix.ver.np }}

runs-on: ${{ matrix.os }}

strategy:

fail-fast: false

@@ -84,19 +84,19 @@ jobs:

os: [ubuntu-latest, windows-latest, macos-13, macos-14]

# https://github.com/scipy/oldest-supported-numpy/blob/main/setup.cfg

ver:

- - {py: '3.8', np: '==1.20.0', sp: '==1.5.4'}

- - {py: '3.9', np: '==1.20.0', sp: '==1.5.4'}

- - {py: '3.10', np: '==1.21.6', sp: '==1.7.2'}

- - {py: '3.11', np: '==1.23.2', sp: '==1.9.2'}

- - {py: '3.12', np: '==1.26.2', sp: '==1.11.2'}

- - {py: '3.12', np: '>=2.0.0rc1', sp: '>=1.13.0'}

+ - {py: '3.8', np: '==1.20.0'}

+ - {py: '3.9', np: '==1.20.0'}

+ - {py: '3.10', np: '==1.21.6'}

+ - {py: '3.11', np: '==1.23.2'}

+ - {py: '3.12', np: '==1.26.2'}

+ - {py: '3.12', np: '>=2.0.0rc1'}

exclude:

- os: macos-14

- ver: {py: '3.8', np: '==1.20.0', sp: '==1.5.4'}

+ ver: {py: '3.8', np: '==1.20.0'}

- os: macos-14

- ver: {py: '3.9', np: '==1.20.0', sp: '==1.5.4'}

+ ver: {py: '3.9', np: '==1.20.0'}

- os: macos-14

- ver: {py: '3.10', np: '==1.21.6', sp: '==1.7.2'}

+ ver: {py: '3.10', np: '==1.21.6'}

steps:

- uses: actions/checkout@v4

with:

@@ -110,21 +110,18 @@ jobs:

- name: Install dependencies

run: |

python -m pip install --upgrade pip

- pip install build "coveralls>=3.0.0"

+ pip install build

- - name: Install GSTools

+ - name: Install GSTools-Cython

env:

GSTOOLS_BUILD_PARALLEL: 1

run: |

pip install -v --editable .[test]

- name: Run tests

- env:

- GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

run: |

- pip install "numpy${{ matrix.ver.np }}" "scipy${{ matrix.ver.sp }}"

- python -m pytest --cov gstools --cov-report term-missing -v tests/

- python -m coveralls --service=github

+ pip install "numpy${{ matrix.ver.np }}"

+ python -m pytest -v tests/

- name: Build sdist

run: |

@@ -136,6 +133,39 @@ jobs:

with:

path: dist/*.tar.gz

+ coverage:

+ name: coverage

+ runs-on: ubuntu-latest

+

+ steps:

+ - uses: actions/checkout@v4

+ with:

+ fetch-depth: '0'

+

+ - name: Set up Python 3.9

+ uses: actions/setup-python@v5

+ with:

+ python-version: 3.9

+

+ - name: Install dependencies

+ run: |

+ python -m pip install --upgrade pip

+ pip install "coveralls>=3.0.0"

+

+ - name: Install GSTools-Cython

+ env:

+ GSTOOLS_CY_COV: 1

+ run: |

+ pip install -v --editable .[test]

+

+ - name: Run tests

+ env:

+ GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

+ run: |

+ pip install numpy

+ python -m pytest --cov gstools_cython --cov-report term-missing -v tests/

+ python -m coveralls --service=github

+

upload_to_pypi:

needs: [build_wheels, build_sdist]

runs-on: ubuntu-latest
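Review note on the `build_sdist` matrix above: the following is a minimal Python sketch, not part of the workflow, of how many jobs remain once the three `exclude` entries are applied to the trimmed `os` x `ver` matrix. It assumes an `exclude` entry drops every combination whose listed keys all match, which is how GitHub Actions describes the behaviour. The helper name `expand_matrix` is hypothetical and used only for illustration.

```python
from itertools import product

# Values copied from the "+" side of the build_sdist hunk.
OS = ["ubuntu-latest", "windows-latest", "macos-13", "macos-14"]
VER = [
    {"py": "3.8", "np": "==1.20.0"},
    {"py": "3.9", "np": "==1.20.0"},
    {"py": "3.10", "np": "==1.21.6"},
    {"py": "3.11", "np": "==1.23.2"},
    {"py": "3.12", "np": "==1.26.2"},
    {"py": "3.12", "np": ">=2.0.0rc1"},
]
EXCLUDE = [
    {"os": "macos-14", "ver": {"py": "3.8", "np": "==1.20.0"}},
    {"os": "macos-14", "ver": {"py": "3.9", "np": "==1.20.0"}},
    {"os": "macos-14", "ver": {"py": "3.10", "np": "==1.21.6"}},
]


def expand_matrix(os_list, ver_list, exclude):
    """Hypothetical helper: cross product of os and ver minus excluded combos."""
    jobs = []
    for os_name, ver in product(os_list, ver_list):
        combo = {"os": os_name, "ver": ver}
        # Assumption: a combination is excluded when every key of an
        # exclude entry equals the corresponding value of the combination.
        if any(all(combo[key] == value for key, value in entry.items())
               for entry in exclude):
            continue
        jobs.append(combo)
    return jobs


jobs = expand_matrix(OS, VER, EXCLUDE)
print(len(jobs))
```

Under these assumptions the full cross product has 4 x 6 = 24 combinations, and the three `macos-14` exclusions leave 21 sdist jobs. Dropping the `sp` key from both `ver` and `exclude` keeps the entries identical, so the exclusions still match.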

diff --git a/.gitignore b/.gitignore

index bcdc980be..5334b8efb 100644

--- a/.gitignore

+++ b/.gitignore

@@ -112,7 +112,7 @@ info/

*.cpp

# generated version file

-src/gstools/_version.py

+src/gstools_cython/_version.py

# generated docs

docs/source/examples/

diff --git a/.zenodo.json b/.zenodo.json

index ad72d74be..bb6c631c0 100755

--- a/.zenodo.json

+++ b/.zenodo.json

@@ -1,10 +1,6 @@

{

- "license": "LGPL-3.0+",

+ "license": "LGPL-3.0-or-later",

"contributors": [

- {

- "type": "Other",

- "name": "Bane Sullivan"

- },

{

"orcid": "0000-0002-2547-8102",

"affiliation": "Helmholtz Centre for Environmental Research - UFZ",

diff --git a/CHANGELOG.md b/CHANGELOG.md

index 20fb771b2..b1c868b82 100755

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -1,462 +1,14 @@

# Changelog

-All notable changes to **GSTools** will be documented in this file.

+All notable changes to **GSTools-Cython** will be documented in this file.

-## [1.5.2] - Nifty Neon - 2024-05

+## [1.0.0] - 2024-07

-### Enhancements

-

-- added global variable `config.NUM_THREADS` to select number of threads for parallel computation ([#336](https://github.com/GeoStat-Framework/GSTools/pull/336))

-- speed up sampling with emcee by setting `vectorize=True` in `EnsembleSampler` ([#346](https://github.com/GeoStat-Framework/GSTools/pull/346))

-- prepare numpy 2 support ([#340](https://github.com/GeoStat-Framework/GSTools/pull/340))

- - at least numpy 2.0.0rc1 for building extensions (for Python 3.9 and above)

- - check multiple numpy and scipy versions in CI

- - fixed minimal versions for numpy

- - use `np.asarray` everywhere with `np.atleast_(n)d`

- - fix long/longlong integer issue in cython on windows by always using 64bit integers

-

-### Bugfixes

-- build docs with latest sphinx version ([#340](https://github.com/GeoStat-Framework/GSTools/pull/340))

-- fixed zero division error in spectral density of Integral model ([#347](https://github.com/GeoStat-Framework/GSTools/pull/347))

-- minor pylint fixes for used-before-assignment issues ([#350](https://github.com/GeoStat-Framework/GSTools/pull/350))

-

-### Changes

-- require pyvista 0.40 at least ([#340](https://github.com/GeoStat-Framework/GSTools/pull/340))

-- require matplotlib 3.7 at least ([#350](https://github.com/GeoStat-Framework/GSTools/pull/350))

-- remove universal2 wheels for macos (we already provide separate intel and arm64 wheels) ([#350](https://github.com/GeoStat-Framework/GSTools/pull/350))

-

-

-## [1.5.1] - Nifty Neon - 2023-11

-

-### Enhancements

-

-see [#317](https://github.com/GeoStat-Framework/GSTools/pull/317)

-

-- added wheels for Python 3.12

-- dropped support for Python 3.7 (EOL)

-- linted Cython files with cython-lint

-- use Cython 3 to build extensions

-

-

-## [1.5.0] - Nifty Neon - 2023-06

-

-### Enhancements

-- added `temporal` flag to `CovModel` to explicitly specify spatio-temporal models [#308](https://github.com/GeoStat-Framework/GSTools/pull/308)

- - rotation between spatial and temporal dimension will be ignored

- - added `spatial_dim` to `CovModel` to explicitly set spatial dimension for spatio-temporal models

- - if not using `spatial_dim`, the provided `dim` needs to include the possible temporal dimension

- - `spatial_dim` is always one less than `field_dim` for spatio-temporal models

- - also works with `latlon=True` to have a spatio-temporal model with geographic coordinates

- - all plotting routines respect this

- - the `Field` class now has a `temporal` attribute which forwards the model attribute

- - automatic variogram fitting in kriging classes for `temporal=True` and `latlon=True` will raise an error

-- added `geo_scale` to `CovModel` to have a more consistent way to set the units of the model length scale for geographic coordinates [#308](https://github.com/GeoStat-Framework/GSTools/pull/308)

- - no need to use `rescale` for this anymore (was rather a hack)

- - added `gs.KM_SCALE` which is the same as `gs.EARTH_RADIUS` for kilometer scaling

- - added `gs.DEGREE_SCALE` for great circle distance in degrees

- - added `gs.RADIAN_SCALE` for great circle distance in radians (default and previous behavior)

- - yadrenko variogram respects this and assumes the great circle distances is given in the respective unit

- - `vario_estimate` also has `geo_scale` now to control the units of the bins

-- `vario_estimate` now forwards additional kwargs to `standard_bins` (`bin_no`, `max_dist`) [#308](https://github.com/GeoStat-Framework/GSTools/pull/308)

-- added `low` and `high` arguments to `uniform` transformation [#310](https://github.com/GeoStat-Framework/GSTools/pull/310)

-

-### Changes

-- `CovModel`s expect special arguments by keyword now [#308](https://github.com/GeoStat-Framework/GSTools/pull/308)

-- always use f-strings internally [#283](https://github.com/GeoStat-Framework/GSTools/pull/283)

-- removed `verbose` attribute from `RandMeth` classes [#309](https://github.com/GeoStat-Framework/GSTools/pull/309)

-- all arguments for `RandMeth` classes key-word-only now except `model` [#309](https://github.com/GeoStat-Framework/GSTools/pull/309)

-- rename "package" to "api" in doc structure [#290](https://github.com/GeoStat-Framework/GSTools/pull/290)

-

-### Bugfixes

-- latex equations were not rendered correctly in docs [#290](https://github.com/GeoStat-Framework/GSTools/pull/290)

-

-

-## [1.4.1] - Sassy Sapphire - 2022-11

-

-### Enhancements

-- new (Exponential-) Integral model added [#243](https://github.com/GeoStat-Framework/GSTools/pull/243)

-- added wheels for Python 3.11 [#272](https://github.com/GeoStat-Framework/GSTools/pull/272)

-

-### Changes

-- API documentation is polished and fully auto-generated now [#271](https://github.com/GeoStat-Framework/GSTools/pull/271)

-

-### Bugfixes

-- fixed approximation of `Matern.spectrum` for big `nu` [#243](https://github.com/GeoStat-Framework/GSTools/pull/243)

-- GSTools had wrong version when installed from git archive [#272](https://github.com/GeoStat-Framework/GSTools/pull/272)

-- Field.plot: solve long-standing mpl slider bug [#273](https://github.com/GeoStat-Framework/GSTools/pull/273)

-

-

-## [1.4.0] - Sassy Sapphire - 2022-08

-

-### Enhancements

-- added Youtube tutorial to documentation [#239](https://github.com/GeoStat-Framework/GSTools/pull/239)

-- better support for custom generators [#250](https://github.com/GeoStat-Framework/GSTools/pull/250) [#259](https://github.com/GeoStat-Framework/GSTools/pull/259)

-- add `valid_value_types` class variable to all field classes [#250](https://github.com/GeoStat-Framework/GSTools/pull/250)

-- PyKrige: fix passed variogram in case of latlon models [#254](https://github.com/GeoStat-Framework/GSTools/pull/254)

-- add bounds checks for optional arguments of `CovModel` when resetting by class attribute [#255](https://github.com/GeoStat-Framework/GSTools/pull/255)

-- minor coverage improvements [#255](https://github.com/GeoStat-Framework/GSTools/pull/255)

-- documentation: readability improvements [#257](https://github.com/GeoStat-Framework/GSTools/pull/257)

-

-### Changes

-- drop Python 3.6 support (setuptools>60 needs py>3.7) [#241](https://github.com/GeoStat-Framework/GSTools/pull/241)

-- move `setup.cfg` content to `pyproject.toml` ([PEP 621](https://peps.python.org/pep-0621/)) [#241](https://github.com/GeoStat-Framework/GSTools/pull/241)

-- move to `src/` based package structure (better testing, building and structure) [#241](https://github.com/GeoStat-Framework/GSTools/pull/241)

-- use [extension-helpers](https://pypi.org/project/extension-helpers/) for openmp support in `setup.py` [#241](https://github.com/GeoStat-Framework/GSTools/pull/241)

-- increase minimal version of meshio to v5.1 [#241](https://github.com/GeoStat-Framework/GSTools/pull/241)

-

-### Bugfixes

-- Pyvista v0.32 deprecation warning: use point_data instead of point_arrays [#237](https://github.com/GeoStat-Framework/GSTools/pull/237)

-- remove deprecated scipy (v1.9) method pinv2 [#247](https://github.com/GeoStat-Framework/GSTools/pull/247)

-- change float comparison in tests [#248](https://github.com/GeoStat-Framework/GSTools/pull/248)

-- Cython: solve `-Wsometimes-uninitialized` warning [#255](https://github.com/GeoStat-Framework/GSTools/pull/255)

-

-

-## [1.3.5] - Pure Pink - 2022-01

-

-### Changes

-- remove caps for dependencies [#229](https://github.com/GeoStat-Framework/GSTools/pull/229)

-- build linux wheels with manylinux2014 for all versions ([CIBW v2.3.1](https://github.com/pypa/cibuildwheel/releases/tag/v2.3.1)) [#227](https://github.com/GeoStat-Framework/GSTools/pull/227)

-

-### Bugfixes

-- `Field.mesh` was not compatible with [meshio](https://github.com/nschloe/meshio) v5.1+ [#227](https://github.com/GeoStat-Framework/GSTools/pull/227)

-

-

-## [1.3.4] - Pure Pink - 2021-11

-

-### Enhancements

-- add GStools-Core as optional dependency [#215](https://github.com/GeoStat-Framework/GSTools/pull/215)

-- provide wheels for Python 3.10 [#211](https://github.com/GeoStat-Framework/GSTools/pull/211)

-- provide macOS wheels for Apple Silicon [#211](https://github.com/GeoStat-Framework/GSTools/pull/211)

-

-### Changes

-- remove unnecessary `dim` argument in Cython code [#216](https://github.com/GeoStat-Framework/GSTools/issues/216)

-

-

-## [1.3.3] - Pure Pink - 2021-08

-

-### Enhancements

-See: [#197](https://github.com/GeoStat-Framework/GSTools/issues/197)

-- `gstools.transform`:

- - add keywords `field`, `store`, `process` and `keep_mean` to all transformations to control storage and respect `normalizer`

- - added `apply_function` transformation

- - added `apply` as wrapper for all transformations

- - added `transform` method to all `Field` (sub)classes as interface to `transform.apply`

- - added checks for normal fields to work smoothly with recently added `normalizer` submodule

-- `Field`:

- - allow naming fields when generating and control storage with `store` keyword

- - all subclasses now have the `post_process` keyword (apply mean, normalizer, trend)

- - added subscription to access fields by name (`Field["field"]`)

- - added `set_pos` method to set position tuple

- - allow reusing present `pos` tuple

- - added `pos`, `mesh_type`, `field_names`, `field_shape`, `all_fields` properties

-- `CondSRF`:

- - memory optimization by forwarding `pos` from underlying `krige` instance

- - only recalculate kriging field if `pos` tuple changed (optimized ensemble generation)

-- performance improvement by using `np.asarray` instead of `np.array` where possible

-- updated examples to use new features

-- added incomplete lower gamma function `inc_gamma_low` (for TPLGaussian spectral density)

-- filter `nan` values from `cond_val` array in all kriging routines [#201](https://github.com/GeoStat-Framework/GSTools/issues/201)

-

-### Bugfixes

-- `inc_gamma` was defined wrong for integer `s < 0`

-

-

-## [1.3.2] - Pure Pink - 2021-07

-

-### Bugfixes

-- `vario_estimate` was altering the input field under certain circumstances [#180](https://github.com/GeoStat-Framework/GSTools/issues/180)

-- `emcee` v3.1 now requires `nsteps` in `run_mcmc()` to be integer (called in `RNG.sample_ln_pdf`) [#184](https://github.com/GeoStat-Framework/GSTools/pull/184)

-

-

-## [1.3.1] - Pure Pink - 2021-06

-

-### Enhancements

-- Standalone use of Field class [#166](https://github.com/GeoStat-Framework/GSTools/issues/166)

-- add social badges in README [#169](https://github.com/GeoStat-Framework/GSTools/issues/169), [#170](https://github.com/GeoStat-Framework/GSTools/issues/170)

-

-### Bugfixes

-- use `oldest-supported-numpy` to build cython extensions [#165](https://github.com/GeoStat-Framework/GSTools/pull/165)

-

-

-## [1.3.0] - Pure Pink - 2021-04

-

-### Topics

-

-#### Geographical Coordinates Support ([#113](https://github.com/GeoStat-Framework/GSTools/issues/113))

-- added boolean init parameter `latlon` to indicate a geographic model. When given, spatial dimension is fixed to `dim=3`, `anis` and `angles` will be ignored, since anisotropy is not well-defined on a sphere.

-- add property `field_dim` to indicate the dimension of the resulting field. Will be 2 if `latlon=True`

-- added yadrenko variogram, covariance and correlation method, since the geographic models are derived from standard models in 3D by plugging in the chordal distance of two points on a sphere derived from there great-circle distance `zeta`:

- - `vario_yadrenko`: given by `variogram(2 * np.sin(zeta / 2))`

- - `cov_yadrenko`: given by `covariance(2 * np.sin(zeta / 2))`

- - `cor_yadrenko`: given by `correlation(2 * np.sin(zeta / 2))`

-- added plotting routines for yadrenko methods described above

-- the `isometrize` and `anisometrize` methods will convert `latlon` tuples (given in degree) to points on the unit-sphere in 3D and vice versa

-- representation of geographical models don't display the `dim`, `anis` and `angles` parameters, but `latlon=True`

-- `fit_variogram` will expect an estimated variogram with great-circle distances given in radians

-- **Variogram estimation**

- - `latlon` switch implemented in `estimate_vario` routine

- - will return a variogram estimated by the great-circle distance (haversine formula) given in radians

-- **Field**

- - added plotting routines for latlon fields

- - no vector fields possible on latlon fields

- - corretly handle pos tuple for latlon fields

-

-#### Krige Unification ([#97](https://github.com/GeoStat-Framework/GSTools/issues/97))

-- Swiss Army Knife for kriging: The `Krige` class now provides everything in one place

-- "Kriging the mean" is now possible with the switch `only_mean` in the call routine

-- `Simple`/`Ordinary`/`Universal`/`ExtDrift`/`Detrended` are only shortcuts to `Krige` with limited input parameter list

-- We now use the `covariance` function to build up the kriging matrix (instead of variogram)

-- An `unbiased` switch was added to enable simple kriging (where the unbiased condition is not given)

-- An `exact` switch was added to allow smother results, if a `nugget` is present in the model

-- An `cond_err` parameter was added, where measurement error variances can be given for each conditional point

-- pseudo-inverse matrix is now used to solve the kriging system (can be disabled by the new switch `pseudo_inv`), this is equal to solving the system with least-squares and prevents numerical errors

-- added options `fit_normalizer` and `fit_variogram` to automatically fit normalizer and variogram to given data

-

-#### Directional Variograms and Auto-binning ([#87](https://github.com/GeoStat-Framework/GSTools/issues/87), [#106](https://github.com/GeoStat-Framework/GSTools/issues/106), [#131](https://github.com/GeoStat-Framework/GSTools/issues/131))

-- new routine name `vario_estimate` instead of `vario_estimate_unstructured` (old kept for legacy code) for simplicity

-- new routine name `vario_estimate_axis` instead of `vario_estimate_structured` (old kept for legacy code) for simplicity

-- **vario_estimate**

- - added simple automatic binning routine to determine bins from given data (one third of box diameter as max bin distance, sturges rule for number of bins)

- - allow to pass multiple fields for joint variogram estimation (e.g. for daily precipitation) on same mesh

- - `no_data` option added to allow missing values

- - **masked fields**

- - user can now pass a masked array (or a list of masked arrays) to deselect data points.

- - in addition, a `mask` keyword was added to provide an external mask

- - **directional variograms**

- - diretional variograms can now be estimated

- - either provide a list of direction vectors or angles for directions (spherical coordinates)

- - can be controlled by given angle tolerance and (optional) bandwidth

- - prepared for nD

- - structured fields (pos tuple describes axes) can now be passed to estimate an isotropic or directional variogram

- - distance calculation in cython routines in now independent of dimension

-- **vario_estimate_axis**

- - estimation along array axis now possible in arbitrary dimensions

- - `no_data` option added to allow missing values (sovles [#83](https://github.com/GeoStat-Framework/GSTools/issues/83))

- - axis can be given by name (`"x"`, `"y"`, `"z"`) or axis number (`0`, `1`, `2`, `3`, ...)

-

-#### Better Variogram fitting ([#78](https://github.com/GeoStat-Framework/GSTools/issues/78), [#145](https://github.com/GeoStat-Framework/GSTools/pull/145))

-- fixing sill possible now

-- `loss` is now selectable for smoother handling of outliers

-- r2 score can now be returned to get an impression of the goodness of fitting

-- weights can be passed

-- instead of deselecting parameters, one can also give fix values for each parameter

-- default init guess for `len_scale` is now mean of given bin-centers

-- default init guess for `var` and `nugget` is now mean of given variogram values

-

-#### CovModel update ([#109](https://github.com/GeoStat-Framework/GSTools/issues/109), [#122](https://github.com/GeoStat-Framework/GSTools/issues/122), [#157](https://github.com/GeoStat-Framework/GSTools/pull/157))

-- add new `rescale` argument and attribute to the `CovModel` class to be able to rescale the `len_scale` (usefull for unit conversion or rescaling `len_scale` to coincide with the `integral_scale` like it's the case with the Gaussian model)

- See: [#90](https://github.com/GeoStat-Framework/GSTools/issues/90), [GeoStat-Framework/PyKrige#119](https://github.com/GeoStat-Framework/PyKrige/issues/119)

-- added new `len_rescaled` attribute to the `CovModel` class, which is the rescaled `len_scale`: `len_rescaled = len_scale / rescale`

-- new method `default_rescale` to provide default rescale factor (can be overridden)

-- remove `doctest` calls

-- docstring updates in `CovModel` and derived models

-- updated all models to use the `cor` routine and make use of the `rescale` argument (See: [#90](https://github.com/GeoStat-Framework/GSTools/issues/90))

-- TPL models got a separate base class to not repeat code

-- added **new models** (See: [#88](https://github.com/GeoStat-Framework/GSTools/issues/88)):

- - `HyperSpherical`: (Replaces the old `Intersection` model) Derived from the intersection of hyper-spheres in arbitrary dimensions. Coincides with the linear model in 1D, the circular model in 2D and the classical spherical model in 3D

- - `SuperSpherical`: like the HyperSpherical, but the shape parameter derived from dimension can be set by the user. Coincides with the HyperSpherical model by default

- - `JBessel`: a hole model valid in all dimensions. The shape parameter controls the dimension it was derived from. For `nu=0.5` this model coincides with the well known `wave` hole model.

- - `TPLSimple`: a simple truncated power law controlled by a shape parameter `nu`. Coincides with the truncated linear model for `nu=1`

- - `Cubic`: to be compatible with scikit-gstat in the future

-- all arguments are now stored as float internally ([#157](https://github.com/GeoStat-Framework/GSTools/pull/157))

-- string representation of the `CovModel` class is now using a float precision (`CovModel._prec=3`) to truncate longish output

-- string representation of the `CovModel` class now only shows `anis` and `angles` if model is anisotropic resp. rotated

-- dimension validity check: raise a warning, if given model is not valid in the desired dimension (See: [#86](https://github.com/GeoStat-Framework/GSTools/issues/86))

-

-#### Normalizer, Trend and Mean ([#124](https://github.com/GeoStat-Framework/GSTools/issues/124))

-

-- new `normalize` submodule containing power-transforms for data to gain normality

-- Base-Class: `Normalizer` providing basic functionality including maximum likelihood fitting

-- added: `LogNormal`, `BoxCox`, `BoxCoxShift`, `YeoJohnson`, `Modulus` and `Manly`

-- normalizer, trend and mean can be passed to SRF, Krige and variogram estimation routines

- - A trend can be a callable function, that represents a trend in input data. For example a linear decrease of temperature with height.

- - The normalizer will be applied after the data was detrended, i.e. the trend was substracted from the data, in order to gain normality.

- - The mean is now interpreted as the mean of the normalized data. The user could also provide a callable mean, but it is mostly meant to be constant.

-

-#### Arbitrary dimensions ([#112](https://github.com/GeoStat-Framework/GSTools/issues/112))

-- allow arbitrary dimensions in all routines (CovModel, Krige, SRF, variogram)

-- anisotropy and rotation following a generalization of tait-bryan angles

-- `CovModel` provides `isometrize` and `anisometrize` routines to convert points

-

-#### New Class for Conditioned Random Fields ([#130](https://github.com/GeoStat-Framework/GSTools/issues/130))

-- **THIS BREAKS BACKWARD COMPATIBILITY**

-- `CondSRF` replaces the conditioning feature of the SRF class, which was cumbersome and limited to Ordinary and Simple kriging

-- `CondSRF` behaves similar to the `SRF` class, but instead of a covariance model, it takes a kriging class as input. With this kriging class, all conditioning related settings are defined.

-

-### Enhancements

-- Python 3.9 Support [#107](https://github.com/GeoStat-Framework/GSTools/issues/107)

-- add routines to format struct. pos tuple by given `dim` or `shape`

-- add routine to format struct. pos tuple by given `shape` (variogram helper)

-- remove `field.tools` subpackage

-- support `meshio>=4.0` and add as dependency

-- PyVista mesh support [#59](https://github.com/GeoStat-Framework/GSTools/issues/59)

-- added `EARTH_RADIUS` as constant providing earths radius in km (can be used to rescale models)

-- add routines `latlon2pos` and `pos2latlon` to convert lat-lon coordinates to points on unit-sphere and vice versa

-- a lot of new examples and tutorials

-- `RandMeth` class got a switch to select the sampling strategy

-- plotter for n-D fields added [#141](https://github.com/GeoStat-Framework/GSTools/issues/141)

-- antialias for contour plots of 2D fields [#141](https://github.com/GeoStat-Framework/GSTools/issues/141)

-- building from source is now configured with `pyproject.toml` to care about build dependencies, see [#154](https://github.com/GeoStat-Framework/GSTools/issues/154)

+First release of GSTools-Cython

### Changes

-- drop support for Python 3.5 [#146](https://github.com/GeoStat-Framework/GSTools/pull/146)

-- added a finit limit for shape-parameters in some `CovModel`s [#147](https://github.com/GeoStat-Framework/GSTools/pull/147)

-- drop usage of `pos2xyz` and `xyz2pos`

-- remove structured option from generators (structured pos need to be converted first)

-- explicitly assert dim=2,3 when generating vector fields

-- simplify `pre_pos` routine to save pos tuple and reformat it an unstructured tuple

-- simplify field shaping

-- simplify plotting routines

-- only the `"unstructured"` keyword is recognized everywhere, everything else is interpreted as `"structured"` (e.g. `"rectilinear"`)

-- use GitHub-Actions instead of TravisCI

-- parallel build now controlled by env-var `GSTOOLS_BUILD_PARALLEL=1`, see [#154](https://github.com/GeoStat-Framework/GSTools/issues/154)

-- install extra target for `[dev]` dropped, can be reproduced by `pip install gstools[test, doc]`, see [#154](https://github.com/GeoStat-Framework/GSTools/issues/154)

-

-### Bugfixes

-- typo in keyword argument for vario_estimate_structured [#80](https://github.com/GeoStat-Framework/GSTools/issues/80)

-- isotropic rotation of SRF was not possible [#100](https://github.com/GeoStat-Framework/GSTools/issues/100)

-- `CovModel.opt_arg` now sorted [#103](https://github.com/GeoStat-Framework/GSTools/issues/103)

-- `CovModel.fit`: check if weights are given as a string (numpy comparison error) [#111](https://github.com/GeoStat-Framework/GSTools/issues/111)

-- several pylint fixes ([#159](https://github.com/GeoStat-Framework/GSTools/pull/159))

-

-

-## [1.2.1] - Volatile Violet - 2020-04-14

-

-### Bugfixes

-- `ModuleNotFoundError` is not present in py35

-- Fixing Cressie-Bug #76

-- Adding analytical formula for integral scales of rational and stable model

-- remove prange from IncomprRandMeth summators to prevent errors on Win and macOS

-

-

-## [1.2.0] - Volatile Violet - 2020-03-20

-

-### Enhancements

-- different variogram estimator functions can now be used #51

-- the TPLGaussian and TPLExponential now have analytical spectra #67

-- added property `is_isotropic` to `CovModel` #67

-- reworked the whole krige sub-module to provide multiple kriging methods #67

- - Simple

- - Ordinary

- - Universal

- - External Drift Kriging

- - Detrended Kriging

-- a new transformation function for discrete fields has been added #70

-- reworked tutorial section in the documentation #63

-- pyvista interface #29

-

-### Changes

-- Python versions 2.7 and 3.4 are no longer supported #40 #43

-- `CovModel`: in 3D the input of anisotropy is now treated slightly different: #67

- - single given anisotropy value [e] is converted to [1, e] (it was [e, e] before)

- - two given length-scales [l_1, l_2] are converted to [l_1, l_2, l_2] (it was [l_1, l_2, l_1] before)

-

-### Bugfixes

-- a race condition in the structured variogram estimation has been fixed #51

-

-

-## [1.1.1] - Reverberating Red - 2019-11-08

-

-### Enhancements

-- added a changelog. See: [commit fbea883](https://github.com/GeoStat-Framework/GSTools/commit/fbea88300d0862393e52f4b7c3d2b15c2039498b)

-

-### Changes

-- deprecation warnings are now printed if Python versions 2.7 or 3.4 are used #40 #41

-

-### Bugfixes

-- define spectral_density instead of spectrum in covariance models since Cov-base derives spectrum. See: [commit 00f2747](https://github.com/GeoStat-Framework/GSTools/commit/00f2747fd0503ff8806f2eebfba36acff813416b)

-- better boundaries for `CovModel` parameters. See: https://github.com/GeoStat-Framework/GSTools/issues/37

-

-

-## [1.1.0] - Reverberating Red - 2019-10-01

-

-### Enhancements

-- by using Cython for all the heavy computations, we could achieve quite some speed ups and reduce the memory consumption significantly #16

-- parallel computation in Cython is now supported with the help of OpenMP and the performance increase is nearly linear with increasing cores #16

-- new submodule `krige` providing simple (known mean) and ordinary (estimated mean) kriging working analogous to the srf class

-- interface to pykrige to use the gstools `CovModel` with the pykrige routines (https://github.com/bsmurphy/PyKrige/issues/124)

-- the srf class now provides a `plot` and a `vtk_export` routine

-- incompressible flow fields can now be generated #14

-- new submodule providing several field transformations like: Zinn&Harvey, log-normal, bimodal, ... #13

-- Python 3.4 and 3.7 wheel support #19

-- field can now be generated directly on meshes from [meshio](https://github.com/nschloe/meshio) and [ogs5py](https://github.com/GeoStat-Framework/ogs5py), see: [commit f4a3439](https://github.com/GeoStat-Framework/GSTools/commit/f4a3439400b81d8d9db81a5f7fbf6435f603cf05)

-- the srf and kriging classes now store the last `pos`, `mesh_type` and `field` values to keep them accessible, see: [commit 29f7f1b](https://github.com/GeoStat-Framework/GSTools/commit/29f7f1b029866379ce881f44765f72534d757fae)

-- tutorials on all important features of GSTools have been written for you guys #20

-- a new interface to pyvista is provided to export fields to python vtk representation, which can be used for plotting, exploring and exporting fields #29

-

-### Changes

-- the license was changed from GPL to LGPL in order to promote the use of this library #25

-- the rotation angles are now interpreted in positive direction (counter clock wise)

-- the `force_moments` keyword was removed from the SRF call method, it is now in provided as a field transformation #13

-- drop support of python implementations of the variogram estimators #18

-- the `variogram_normed` method was removed from the `CovModel` class due to redundance [commit 25b1647](https://github.com/GeoStat-Framework/GSTools/commit/25b164722ac6744ebc7e03f3c0bf1c30be1eba89)

-- the position vector of 1D fields does not have to be provided in a list-like object with length 1 [commit a6f5be8](https://github.com/GeoStat-Framework/GSTools/commit/a6f5be8bfd2db1f002e7889ecb8e9a037ea08886)

-

-### Bugfixes

-- several minor bugfixes

-

-

-## [1.0.1] - Bouncy Blue - 2019-01-18

-

-### Bugfixes

-- fixed Numpy and Cython version during build process

-

-

-## [1.0.0] - Bouncy Blue - 2019-01-16

-

-### Enhancements

-- added a new covariance class, which allows the easy usage of arbitrary covariance models

-- added many predefined covariance models, including truncated power law models

-- added [tutorials](https://geostat-framework.readthedocs.io/projects/gstools/en/latest/tutorials.html) and examples, showing and explaining the main features of GSTools

-- variogram models can be fitted to data

-- prebuilt binaries for many Linux distributions, Mac OS and Windows, making the installation, especially of the Cython code, much easier

-- the generated fields can now easily be exported to vtk files

-- variance scaling is supported for coarser grids

-- added pure Python versions of the variogram estimators, in case somebody has problems compiling Cython code

-- the [documentation](https://geostat-framework.readthedocs.io/projects/gstools/en/latest/) is now a lot cleaner and easier to use

-- the code is a lot cleaner and more consistent now

-- unit tests are now automatically tested when new code is pushed

-- test coverage of code is shown

-- GeoStat Framework now has a website, visit us: https://geostat-framework.github.io/

-

-### Changes

-- release is not downwards compatible with release v0.4.0

-- SRF creation has been adapted for the `CovModel`

-- a tuple `pos` is now used instead of `x`, `y`, and `z` for the axes

-- renamed `estimate_unstructured` and `estimate_structured` to `vario_estimate_unstructured` and `vario_estimate_structured` for less ambiguity

-

-### Bugfixes

-- several minor bugfixes

-

-

-## [0.4.0] - Glorious Green - 2018-07-17

-

-### Bugfixes

-- import of cython functions put into a try-block

-

-

-## [0.3.6] - Original Orange - 2018-07-17

-

-First release of GSTools.

+- moved Cython files into this separate package

-[Unreleased]: https://github.com/GeoStat-Framework/gstools/compare/v1.5.2...HEAD

-[1.5.2]: https://github.com/GeoStat-Framework/gstools/compare/v1.5.1...v1.5.2

-[1.5.1]: https://github.com/GeoStat-Framework/gstools/compare/v1.5.0...v1.5.1

-[1.5.0]: https://github.com/GeoStat-Framework/gstools/compare/v1.4.1...v1.5.0

-[1.4.1]: https://github.com/GeoStat-Framework/gstools/compare/v1.4.0...v1.4.1

-[1.4.0]: https://github.com/GeoStat-Framework/gstools/compare/v1.3.5...v1.4.0

-[1.3.5]: https://github.com/GeoStat-Framework/gstools/compare/v1.3.4...v1.3.5

-[1.3.4]: https://github.com/GeoStat-Framework/gstools/compare/v1.3.3...v1.3.4

-[1.3.3]: https://github.com/GeoStat-Framework/gstools/compare/v1.3.2...v1.3.3

-[1.3.2]: https://github.com/GeoStat-Framework/gstools/compare/v1.3.1...v1.3.2

-[1.3.1]: https://github.com/GeoStat-Framework/gstools/compare/v1.3.0...v1.3.1

-[1.3.0]: https://github.com/GeoStat-Framework/gstools/compare/v1.2.1...v1.3.0

-[1.2.1]: https://github.com/GeoStat-Framework/gstools/compare/v1.2.0...v1.2.1

-[1.2.0]: https://github.com/GeoStat-Framework/gstools/compare/v1.1.1...v1.2.0

-[1.1.1]: https://github.com/GeoStat-Framework/gstools/compare/v1.1.0...v1.1.1

-[1.1.0]: https://github.com/GeoStat-Framework/gstools/compare/v1.0.1...v1.1.0

-[1.0.1]: https://github.com/GeoStat-Framework/gstools/compare/v1.0.0...v1.0.1

-[1.0.0]: https://github.com/GeoStat-Framework/gstools/compare/0.4.0...v1.0.0

-[0.4.0]: https://github.com/GeoStat-Framework/gstools/compare/0.3.6...0.4.0

-[0.3.6]: https://github.com/GeoStat-Framework/gstools/releases/tag/0.3.6

+[Unreleased]: https://github.com/GeoStat-Framework/gstools-cython/compare/v1.0.0...HEAD

+[1.0.0]: https://github.com/GeoStat-Framework/gstools-cython/releases/tag/v1.0.0

diff --git a/MANIFEST.in b/MANIFEST.in

index 24184482a..5778d3fa3 100644

--- a/MANIFEST.in

+++ b/MANIFEST.in

@@ -1,4 +1,4 @@

prune **

recursive-include tests *.py

-recursive-include src/gstools *.py *.pyx

+recursive-include src/gstools_cython *.py *.pyx

include AUTHORS.md LICENSE README.md pyproject.toml setup.py

diff --git a/README.md b/README.md

index 6cb699019..fcc9ed143 100644

--- a/README.md

+++ b/README.md

@@ -1,11 +1,8 @@

-# Welcome to GSTools

+# Welcome to GSTools-Cython

[](https://doi.org/10.5194/gmd-15-3161-2022)

-[](https://doi.org/10.5281/zenodo.1313628)

-[](https://badge.fury.io/py/gstools)

-[](https://anaconda.org/conda-forge/gstools)

-[](https://github.com/GeoStat-Framework/GSTools/actions)

-[](https://coveralls.io/github/GeoStat-Framework/GSTools?branch=main)

-[](https://geostat-framework.readthedocs.io/projects/gstools/en/stable/?badge=stable)

+[](https://github.com/GeoStat-Framework/GSTools-Cython/actions)

+[](https://coveralls.io/github/GeoStat-Framework/GSTools-Cython?branch=main)

+[](https://geostat-framework.readthedocs.io/projects/gstools-cython/en/stable/?badge=stable)

[](https://github.com/ambv/black)

@@ -15,36 +12,10 @@

Get in Touch!

-

- -

-

-Youtube Tutorial on GSTools

-

-

-

- -

-

-

-

-

-## Purpose

-

- -

-GeoStatTools provides geostatistical tools for various purposes:

-- random field generation

-- simple, ordinary, universal and external drift kriging

-- conditioned field generation

-- incompressible random vector field generation

-- (automated) variogram estimation and fitting

-- directional variogram estimation and modelling

-- data normalization and transformation

-- many readily provided and even user-defined covariance models

-- metric spatio-temporal modelling

-- plotting and exporting routines

-

## Installation

@@ -88,275 +59,19 @@ You can cite the Zenodo code publication of GSTools by:

If you want to cite a specific version, have a look at the [Zenodo site](https://doi.org/10.5281/zenodo.1313628).

-## Documentation for GSTools

-

-You can find the documentation under [geostat-framework.readthedocs.io][doc_link].

-

-

-### Tutorials and Examples

-

-The documentation also includes some [tutorials][tut_link], showing the most important use cases of GSTools, which are

-

-- [Random Field Generation][tut1_link]

-- [The Covariance Model][tut2_link]

-- [Variogram Estimation][tut3_link]

-- [Random Vector Field Generation][tut4_link]

-- [Kriging][tut5_link]

-- [Conditioned random field generation][tut6_link]

-- [Field transformations][tut7_link]

-- [Geographic Coordinates][tut8_link]

-- [Spatio-Temporal Modelling][tut9_link]

-- [Normalizing Data][tut10_link]

-- [Miscellaneous examples][tut0_link]

-

-The associated python scripts are provided in the `examples` folder.

-

-

-## Spatial Random Field Generation

-

-The core of this library is the generation of spatial random fields. These fields are generated using the randomisation method, described by [Heße et al. 2014][rand_link].

-

-[rand_link]: https://doi.org/10.1016/j.envsoft.2014.01.013

-

-

-### Examples

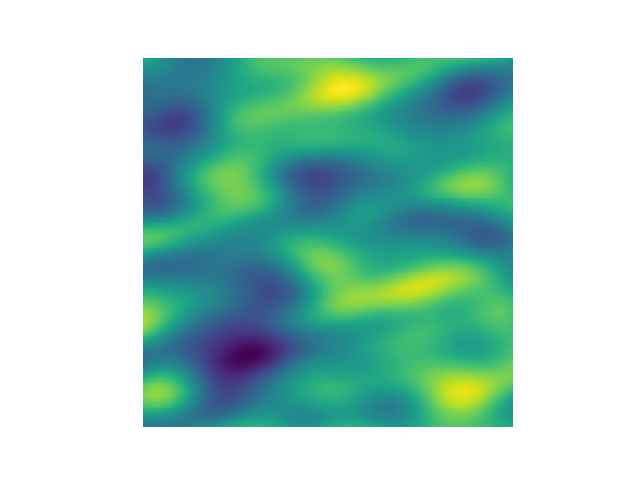

-

-#### Gaussian Covariance Model

-

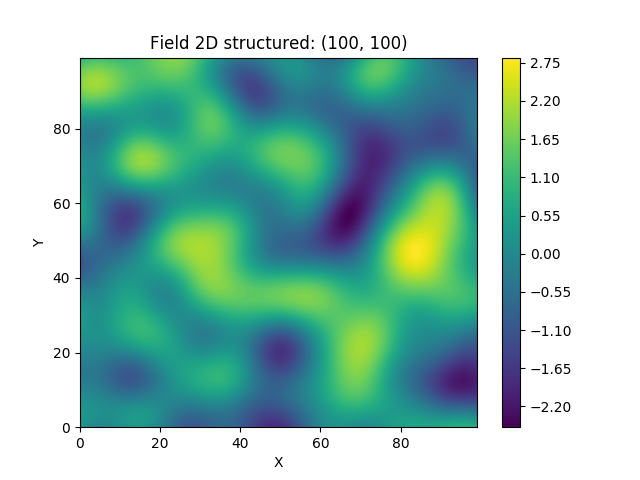

-This is an example of how to generate a 2 dimensional spatial random field with a gaussian covariance model.

+## Documentation

-```python

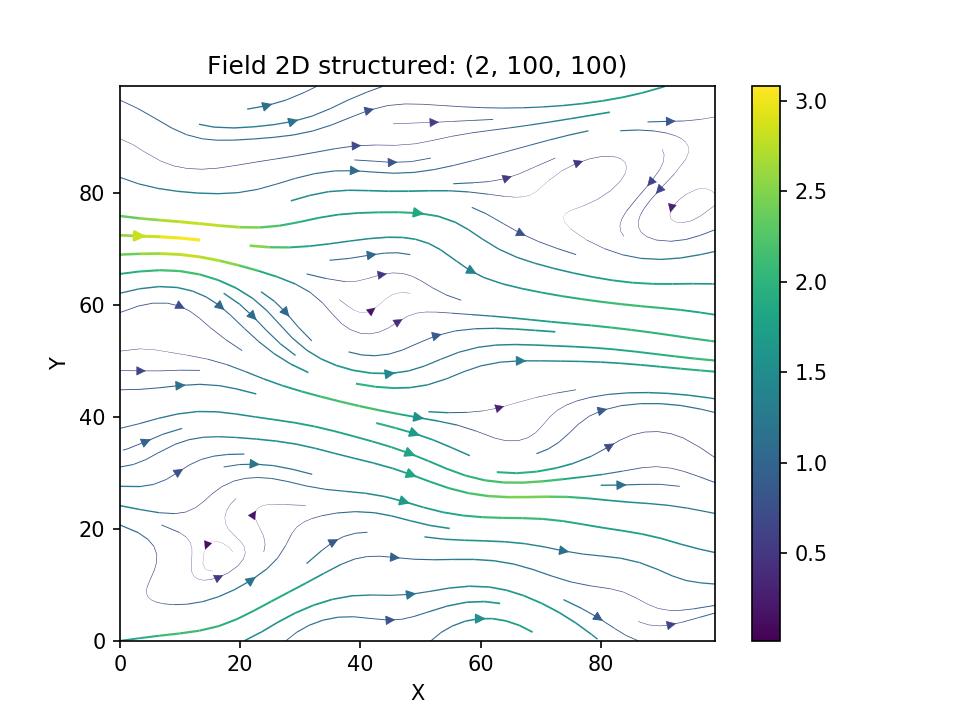

-import gstools as gs

-# structured field with a size 100x100 and a grid-size of 1x1

-x = y = range(100)

-model = gs.Gaussian(dim=2, var=1, len_scale=10)

-srf = gs.SRF(model)

-srf((x, y), mesh_type='structured')

-srf.plot()

-```

-

-

- -

-

-

-GSTools also provides support for [geographic coordinates](https://en.wikipedia.org/wiki/Geographic_coordinate_system).

-This works perfectly well with [cartopy](https://scitools.org.uk/cartopy/docs/latest/index.html).

-

-```python

-import matplotlib.pyplot as plt

-import cartopy.crs as ccrs

-import gstools as gs

-# define a structured field by latitude and longitude

-lat = lon = range(-80, 81)

-model = gs.Gaussian(latlon=True, len_scale=777, geo_scale=gs.KM_SCALE)

-srf = gs.SRF(model, seed=12345)

-field = srf.structured((lat, lon))

-# Orthographic plotting with cartopy

-ax = plt.subplot(projection=ccrs.Orthographic(-45, 45))

-cont = ax.contourf(lon, lat, field, transform=ccrs.PlateCarree())

-ax.coastlines()

-ax.set_global()

-plt.colorbar(cont)

-```

-

-

- -

-

+- GSTools: https://gstools.readthedocs.io/

+- GSTools-Cython: https://gstools-cython.readthedocs.io/

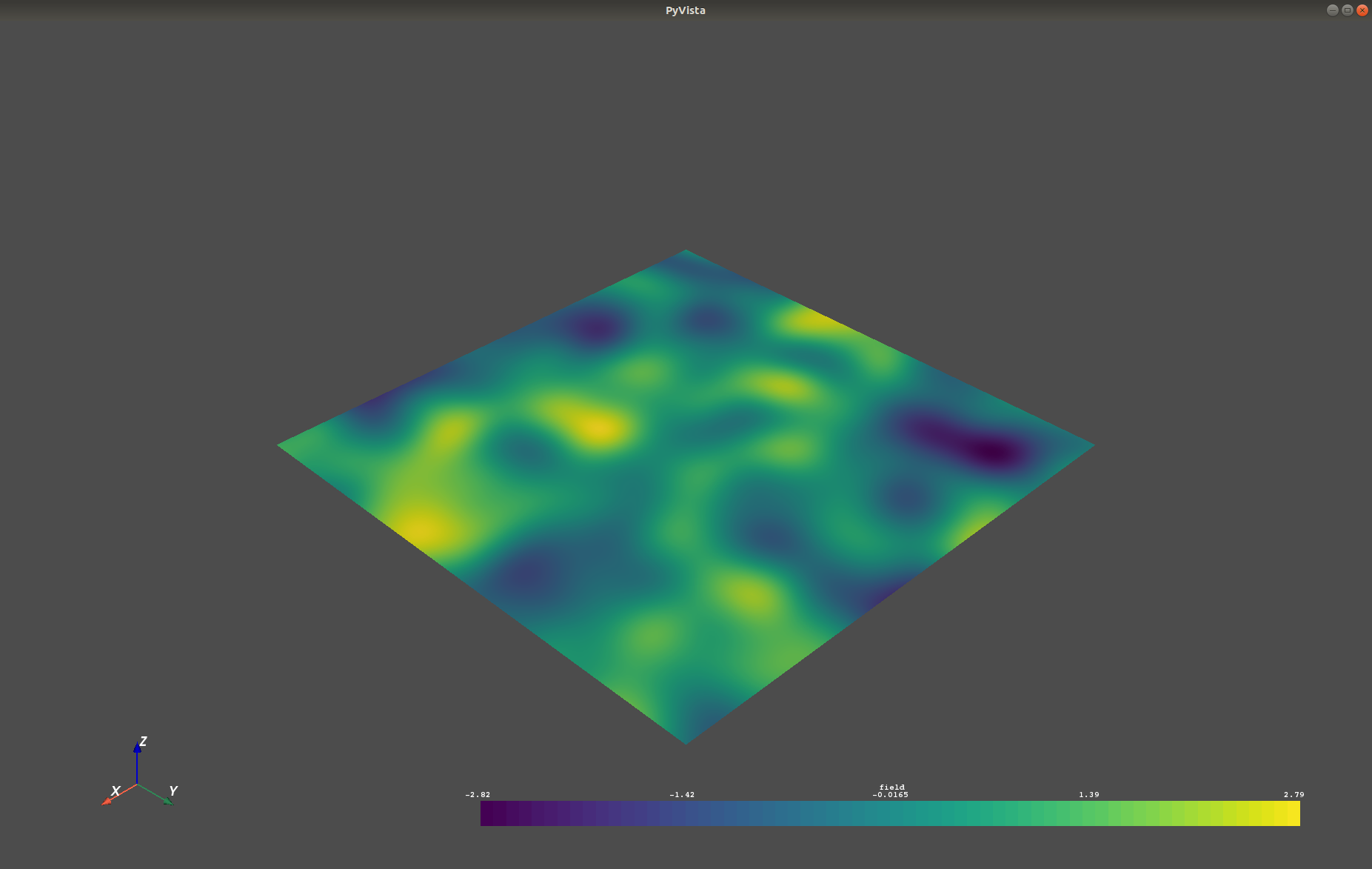

-A similar example but for a three dimensional field is exported to a [VTK](https://vtk.org/) file, which can be visualized with [ParaView](https://www.paraview.org/) or [PyVista](https://docs.pyvista.org) in Python:

-

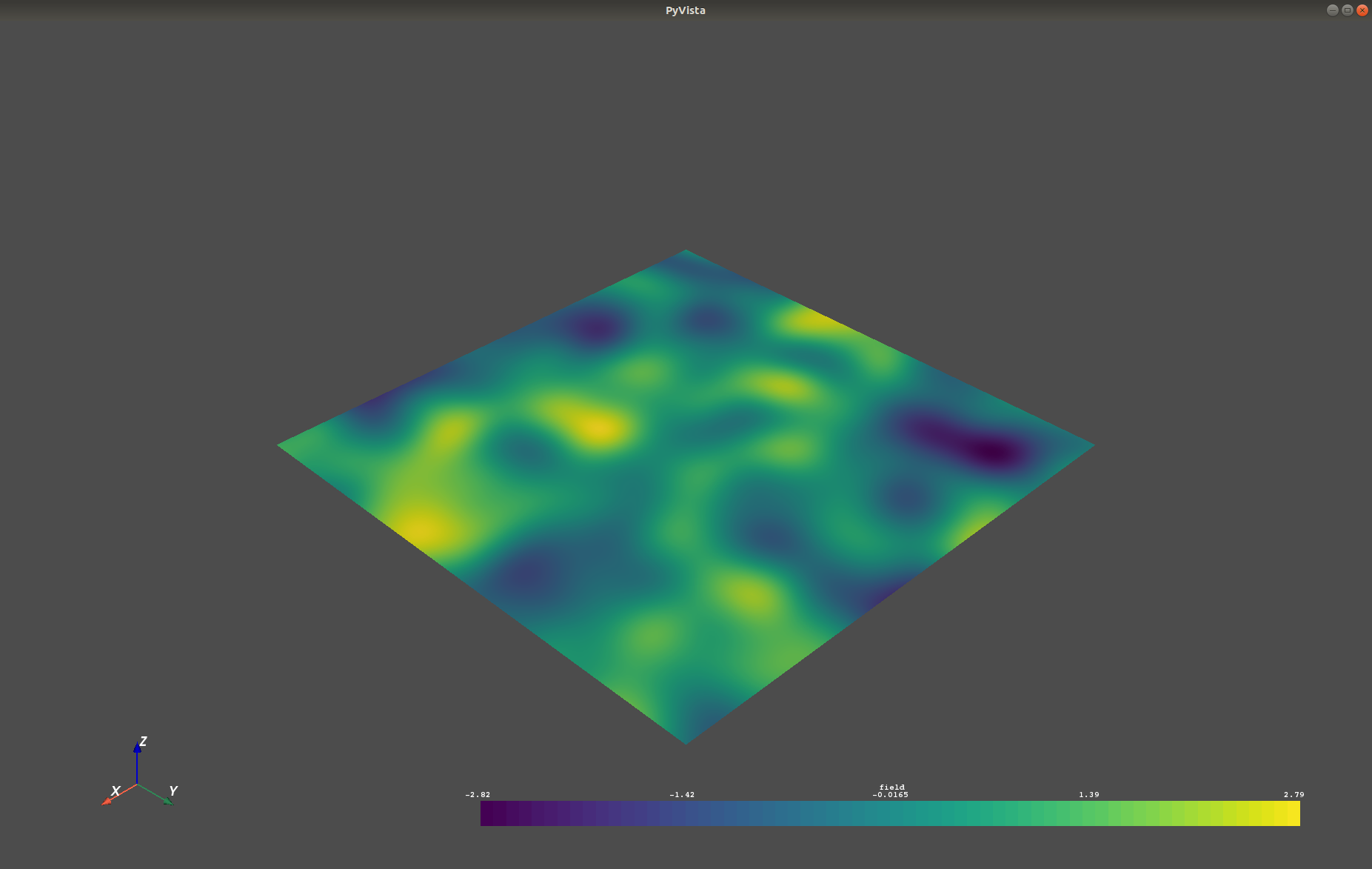

-```python

-import gstools as gs

-# structured field with a size 100x100x100 and a grid-size of 1x1x1

-x = y = z = range(100)

-model = gs.Gaussian(dim=3, len_scale=[16, 8, 4], angles=(0.8, 0.4, 0.2))

-srf = gs.SRF(model)

-srf((x, y, z), mesh_type='structured')

-srf.vtk_export('3d_field') # Save to a VTK file for ParaView

-

-mesh = srf.to_pyvista() # Create a PyVista mesh for plotting in Python

-mesh.contour(isosurfaces=8).plot()

-```

-

-

- -

-

+## Cython backend

+This package is the Cython backend implementation for GSTools.

-## Estimating and Fitting Variograms

-

-The spatial structure of a field can be analyzed with the variogram, which contains the same information as the covariance function.

-

-All covariance models can be used to fit given variogram data by a simple interface.

-

-### Example

-

-This is an example of how to estimate the variogram of a 2 dimensional unstructured field and estimate the parameters of the covariance

-model again.

-

-```python

-import numpy as np

-import gstools as gs

-# generate a synthetic field with an exponential model

-x = np.random.RandomState(19970221).rand(1000) * 100.

-y = np.random.RandomState(20011012).rand(1000) * 100.

-model = gs.Exponential(dim=2, var=2, len_scale=8)

-srf = gs.SRF(model, mean=0, seed=19970221)

-field = srf((x, y))

-# estimate the variogram of the field

-bin_center, gamma = gs.vario_estimate((x, y), field)

-# fit the variogram with a stable model. (no nugget fitted)

-fit_model = gs.Stable(dim=2)

-fit_model.fit_variogram(bin_center, gamma, nugget=False)

-# output

-ax = fit_model.plot(x_max=max(bin_center))

-ax.scatter(bin_center, gamma)

-print(fit_model)

-```

-

-Which gives:

-

-```python

-Stable(dim=2, var=1.85, len_scale=7.42, nugget=0.0, anis=[1.0], angles=[0.0], alpha=1.09)

-```

-

-

- -

-

-

-## Kriging and Conditioned Random Fields

-

-An important part of geostatistics is Kriging and conditioning spatial random

-fields to measurements. With conditioned random fields, an ensemble of field realizations with their variability depending on the proximity of the measurements can be generated.

-

-### Example

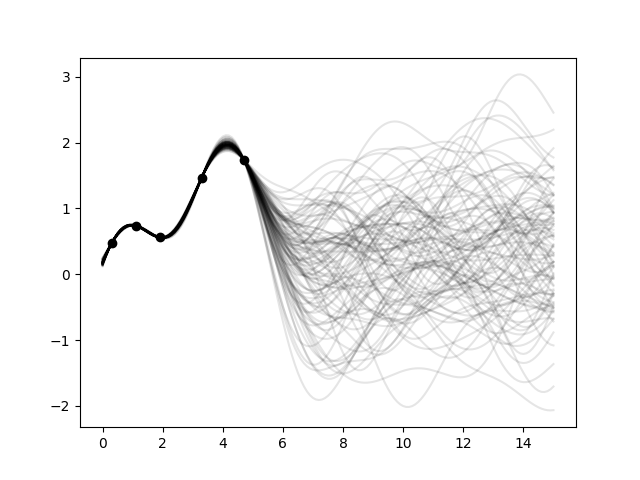

-For better visualization, we will condition a 1d field to a few "measurements", generate 100 realizations and plot them:

-

-```python

-import numpy as np

-import matplotlib.pyplot as plt

-import gstools as gs

-

-# conditions

-cond_pos = [0.3, 1.9, 1.1, 3.3, 4.7]

-cond_val = [0.47, 0.56, 0.74, 1.47, 1.74]

-

-# conditioned spatial random field class

-model = gs.Gaussian(dim=1, var=0.5, len_scale=2)

-krige = gs.krige.Ordinary(model, cond_pos, cond_val)

-cond_srf = gs.CondSRF(krige)

-# same output positions for all ensemble members

-grid_pos = np.linspace(0.0, 15.0, 151)

-cond_srf.set_pos(grid_pos)

-

-# seeded ensemble generation

-seed = gs.random.MasterRNG(20170519)

-for i in range(100):

- field = cond_srf(seed=seed(), store=f"field_{i}")

- plt.plot(grid_pos, field, color="k", alpha=0.1)

-plt.scatter(cond_pos, cond_val, color="k")

-plt.show()

-```

-

-

- -

-

-

-## User Defined Covariance Models

-

-One of the core-features of GSTools is the powerful

-[CovModel][cov_link]

-class, which allows to easy define covariance models by the user.

-

-### Example

-

-Here we re-implement the Gaussian covariance model by defining just a

-[correlation][cor_link] function, which takes a non-dimensional distance ``h = r/l``:

-

-```python

-import numpy as np

-import gstools as gs

-# use CovModel as the base-class

-class Gau(gs.CovModel):

- def cor(self, h):

- return np.exp(-h**2)

-```

-

-And that's it! With ``Gau`` you now have a fully working covariance model,

-which you could use for field generation or variogram fitting as shown above.

-

-Have a look at the [documentation ][doc_link] for further information on incorporating

-optional parameters and optimizations.

-

-

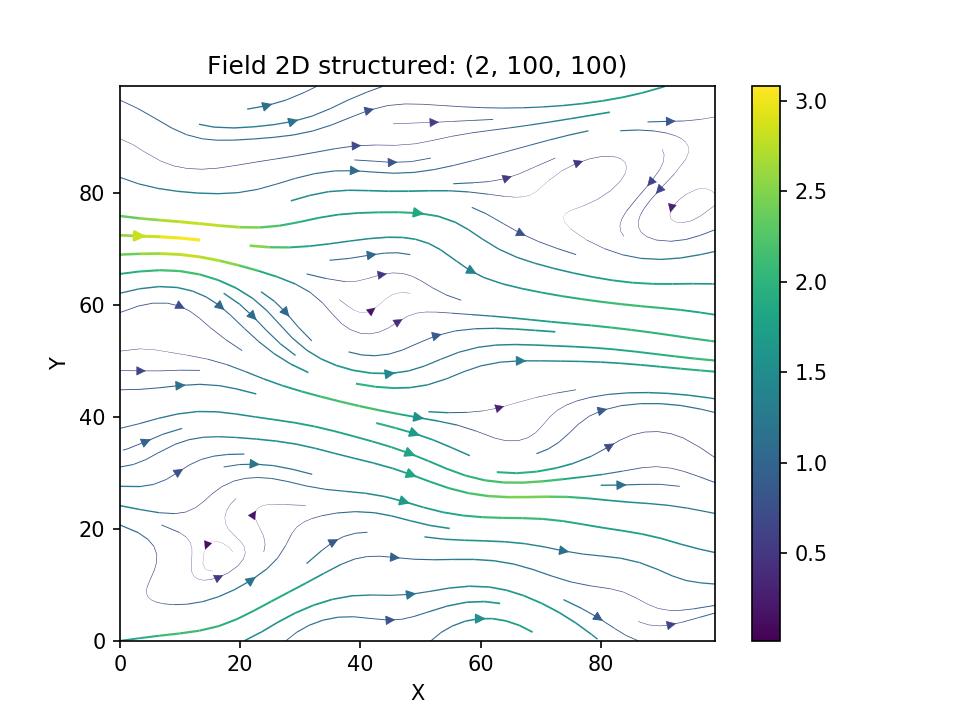

-## Incompressible Vector Field Generation

-

-Using the original [Kraichnan method][kraichnan_link], incompressible random

-spatial vector fields can be generated.

-

-

-### Example

-

-```python

-import numpy as np

-import gstools as gs

-x = np.arange(100)

-y = np.arange(100)

-model = gs.Gaussian(dim=2, var=1, len_scale=10)

-srf = gs.SRF(model, generator='VectorField', seed=19841203)

-srf((x, y), mesh_type='structured')

-srf.plot()

-```

-

-yielding

-

-

- -

-

-

-

-[kraichnan_link]: https://doi.org/10.1063/1.1692799

-

-

-## VTK/PyVista Export

-

-After you have created a field, you may want to save it to file, so we provide

-a handy [VTK][vtk_link] export routine using the `.vtk_export()` or you could

-create a VTK/PyVista dataset for use in Python with to `.to_pyvista()` method:

-

-```python

-import gstools as gs

-x = y = range(100)

-model = gs.Gaussian(dim=2, var=1, len_scale=10)

-srf = gs.SRF(model)

-srf((x, y), mesh_type='structured')

-srf.vtk_export("field") # Saves to a VTK file

-mesh = srf.to_pyvista() # Create a VTK/PyVista dataset in memory

-mesh.plot()

-```

-

-Which gives a RectilinearGrid VTK file ``field.vtr`` or creates a PyVista mesh

-in memory for immediate 3D plotting in Python.

-

-

- -

-

-

-

-## Requirements:

+## Requirements

- [NumPy >= 1.20.0](https://www.numpy.org)

-- [SciPy >= 1.1.0](https://www.scipy.org/scipylib)

-- [hankel >= 1.0.0](https://github.com/steven-murray/hankel)

-- [emcee >= 3.0.0](https://github.com/dfm/emcee)

-- [pyevtk >= 1.1.1](https://github.com/pyscience-projects/pyevtk)

-- [meshio >= 5.1.0](https://github.com/nschloe/meshio)

-

-### Optional

-

-- [GSTools-Core >= 0.2.0](https://github.com/GeoStat-Framework/GSTools-Core)

-- [matplotlib](https://matplotlib.org)

-- [pyvista](https://docs.pyvista.org/)

## Contact

@@ -368,28 +83,4 @@ You can contact us via .

[LGPLv3][license_link] © 2018-2024

-[pip_link]: https://pypi.org/project/gstools

-[conda_link]: https://docs.conda.io/en/latest/miniconda.html

-[conda_forge_link]: https://github.com/conda-forge/gstools-feedstock#installing-gstools

-[conda_pip]: https://docs.conda.io/projects/conda/en/latest/user-guide/tasks/manage-pkgs.html#installing-non-conda-packages

-[pipiflag]: https://pip-python3.readthedocs.io/en/latest/reference/pip_install.html?highlight=i#cmdoption-i

-[winpy_link]: https://winpython.github.io/

-[license_link]: https://github.com/GeoStat-Framework/GSTools/blob/main/LICENSE

-[cov_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/generated/gstools.covmodel.CovModel.html#gstools.covmodel.CovModel

-[stable_link]: https://en.wikipedia.org/wiki/Stable_distribution

-[doc_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/

-[doc_install_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/#pip

-[tut_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/tutorials.html

-[tut1_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/01_random_field/index.html

-[tut2_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/02_cov_model/index.html

-[tut3_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/03_variogram/index.html

-[tut4_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/04_vector_field/index.html

-[tut5_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/05_kriging/index.html

-[tut6_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/06_conditioned_fields/index.html

-[tut7_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/07_transformations/index.html

-[tut8_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/08_geo_coordinates/index.html

-[tut9_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/09_spatio_temporal/index.html

-[tut10_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/10_normalizer/index.html

-[tut0_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/00_misc/index.html

-[cor_link]: https://en.wikipedia.org/wiki/Autocovariance#Normalization

-[vtk_link]: https://www.vtk.org/

+[license_link]: https://github.com/GeoStat-Framework/GSTools-Cython/blob/main/LICENSE

diff --git a/docs/source/api.rst b/docs/source/api.rst

index fe12233b0..8364cf371 100644

--- a/docs/source/api.rst

+++ b/docs/source/api.rst

@@ -1,8 +1,8 @@

-===========

-GSTools API

-===========

+==================

+GSTools-Cython API

+==================

-.. automodule:: gstools

+.. automodule:: gstools_cython

.. raw:: latex

diff --git a/docs/source/conf.py b/docs/source/conf.py

index e89928fc9..7d98e0c30 100644

--- a/docs/source/conf.py

+++ b/docs/source/conf.py

@@ -33,7 +33,7 @@

# local module should not be added to sys path if it's installed on RTFD

# see: https://stackoverflow.com/a/31882049/6696397

# sys.path.insert(0, os.path.abspath("../../"))

-from gstools import __version__ as ver

+from gstools_cython import __version__ as ver

def skip(app, what, name, obj, skip, options):

@@ -66,9 +66,7 @@ def setup(app):

"sphinx.ext.autosummary",

"sphinx.ext.napoleon", # parameters look better than with numpydoc only

"numpydoc",

- "sphinx_gallery.gen_gallery",

"m2r2",

- "sphinxcontrib.youtube",

]

# autosummaries from source-files

@@ -109,7 +107,7 @@ def setup(app):

# General information about the project.

curr_year = datetime.datetime.now().year

-project = "GSTools"

+project = "GSTools-Cython"

copyright = f"2018 - {curr_year}, Sebastian Müller, Lennart Schüler"

author = "Sebastian Müller, Lennart Schüler"

@@ -217,8 +215,8 @@ def setup(app):

latex_documents = [

(

master_doc,

- "GeoStatTools.tex",

- "GeoStatTools Documentation",

+ "GeoStatTools-Cython.tex",

+ "GeoStatTools-Cython Documentation",

"Sebastian Müller, Lennart Schüler",

"manual",

)

@@ -230,7 +228,13 @@ def setup(app):

# One entry per manual page. List of tuples

# (source start file, name, description, authors, manual section).

man_pages = [

- (master_doc, "geostattools", "GeoStatTools Documentation", [author], 1)

+ (

+ master_doc,

+ "geostattools-cython",

+ "GeoStatTools-Cython Documentation",

+ [author],

+ 1,

+ )

]

@@ -242,11 +246,11 @@ def setup(app):

texinfo_documents = [

(

master_doc,

- "GeoStatTools",

- "GeoStatTools Documentation",

+ "GeoStatTools-Cython",

+ "GeoStatTools-Cython Documentation",

author,

- "GeoStatTools",

- "Geo-statistical toolbox.",

+ "GeoStatTools-Cython",

+ "Cython backend for GSTools.",

"Miscellaneous",

)

]

@@ -260,73 +264,4 @@ def setup(app):

intersphinx_mapping = {

"Python": ("https://docs.python.org/", None),

"NumPy": ("https://numpy.org/doc/stable/", None),

- "SciPy": ("https://docs.scipy.org/doc/scipy/", None),

- "matplotlib": ("https://matplotlib.org/stable/", None),

- "hankel": ("https://hankel.readthedocs.io/en/latest/", None),

- "emcee": ("https://emcee.readthedocs.io/en/latest/", None),

-}

-

-# -- Sphinx Gallery Options

-from sphinx_gallery.sorting import FileNameSortKey

-

-# Use pyvista's image scraper for example gallery

-# import pyvista

-# https://github.com/tkoyama010/pyvista-doc-translations/blob/85c835a3ada3a2adefac06ba70e15a101ffa9162/conf.py#L21

-# https://github.com/simpeg/discretize/blob/f414dd7ee7c5ba9a141cb2c37d4b71fdc531eae8/docs/conf.py#L334

-# Make sure off screen is set to true when building locally

-# pyvista.OFF_SCREEN = True

-# # necessary when building the sphinx gallery

-# pyvista.BUILDING_GALLERY = True

-# # Optional - set parameters like theme or window size

-# pyvista.set_plot_theme("document")

-

-sphinx_gallery_conf = {

- # "image_scrapers": ("pyvista", "matplotlib"),

- "remove_config_comments": True,

- # only show "print" output as output

- "capture_repr": (),

- # path to your examples scripts

- "examples_dirs": [

- "../../examples/00_misc/",

- "../../examples/01_random_field/",

- "../../examples/02_cov_model/",

- "../../examples/03_variogram/",

- "../../examples/04_vector_field/",

- "../../examples/05_kriging/",

- "../../examples/06_conditioned_fields/",

- "../../examples/07_transformations/",

- "../../examples/08_geo_coordinates/",

- "../../examples/09_spatio_temporal/",

- "../../examples/10_normalizer/",

- ],

- # path where to save gallery generated examples

- "gallery_dirs": [

- "examples/00_misc/",

- "examples/01_random_field/",

- "examples/02_cov_model/",

- "examples/03_variogram/",

- "examples/04_vector_field/",

- "examples/05_kriging/",

- "examples/06_conditioned_fields/",

- "examples/07_transformations/",

- "examples/08_geo_coordinates/",

- "examples/09_spatio_temporal/",

- "examples/10_normalizer/",

- ],

- # Pattern to search for example files

- "filename_pattern": r"\.py",

- # Remove the "Download all examples" button from the top level gallery

- "download_all_examples": False,

- # Sort gallery example by file name instead of number of lines (default)

- "within_subsection_order": FileNameSortKey,

- # directory where function granular galleries are stored

- "backreferences_dir": None,

- # Modules for which function level galleries are created. In

- "doc_module": "gstools",

- # "first_notebook_cell": (

- # "%matplotlib inline\n"

- # "from pyvista import set_plot_theme\n"

- # "set_plot_theme('document')"

- # ),

- "matplotlib_animations": True,

}

diff --git a/docs/source/contents.rst b/docs/source/contents.rst

index 3224356ee..402d48908 100644

--- a/docs/source/contents.rst

+++ b/docs/source/contents.rst

@@ -7,6 +7,5 @@ Contents

:maxdepth: 3

index

- tutorials

api

changelog

diff --git a/docs/source/index.rst b/docs/source/index.rst

index ecad05830..3bd447c43 100644

--- a/docs/source/index.rst

+++ b/docs/source/index.rst

@@ -1,459 +1 @@

-==================

-GSTools Quickstart

-==================

-

-.. image:: https://raw.githubusercontent.com/GeoStat-Framework/GSTools/main/docs/source/pics/gstools.png

- :width: 150px

- :align: center

-

-.. only:: html

-

- **Get in Touch!**

-

- |GH-Discussions| |Slack-Swung| |Gitter-GSTools| |Email| |Twitter|

-

- **Youtube Tutorial on GSTools**

-

- .. youtube:: qZBJ-AZXq6Q

- :width: 100%

-

- |

-

-Purpose

-=======

-

-GeoStatTools provides geostatistical tools for various purposes:

-

-- random field generation

-- simple, ordinary, universal and external drift kriging

-- conditioned field generation

-- incompressible random vector field generation

-- (automated) variogram estimation and fitting

-- directional variogram estimation and modelling

-- data normalization and transformation

-- many readily provided and even user-defined covariance models

-- metric spatio-temporal modelling

-- plotting and exporting routines

-

-

-Installation

-============

-

-conda

------

-

-GSTools can be installed via

-`conda `_ on Linux, Mac, and

-Windows.

-Install the package by typing the following command in a command terminal:

-

-.. code-block:: none

-

- conda install gstools

-

-In case conda forge is not set up for your system yet, see the easy to follow

-instructions on `conda forge `_.

-Using conda, the parallelized version of GSTools should be installed.

-

-

-pip

----

-

-GSTools can be installed via `pip `_

-on Linux, Mac, and Windows.

-On Windows you can install `WinPython `_ to get

-Python and pip running.

-Install the package by typing the following into command in a command terminal:

-

-.. code-block:: none

-

- pip install gstools

-

-To get the latest development version you can install it directly from GitHub:

-

-.. code-block:: none

-

- pip install git+git://github.com/GeoStat-Framework/GSTools.git@main

-

-If something went wrong during installation, try the :code:`-I` `flag from pip `_.

-

-**Speeding up GSTools by parallelization**

-

-To enable the OpenMP support, you have to provide a C compiler and OpenMP.

-Parallel support is controlled by an environment variable ``GSTOOLS_BUILD_PARALLEL``,

-that can be ``0`` or ``1`` (interpreted as ``0`` if not present).

-GSTools then needs to be installed from source:

-

-.. code-block:: none

-

- export GSTOOLS_BUILD_PARALLEL=1

- pip install --no-binary=gstools gstools

-

-Note, that the ``--no-binary=gstools`` option forces pip to not use a wheel for GSTools.

-

-For the development version, you can do almost the same:

-

-.. code-block:: none

-

- export GSTOOLS_BUILD_PARALLEL=1

- pip install git+git://github.com/GeoStat-Framework/GSTools.git@main

-

-The number of parallel threads can be set with the global variable `config.NUM_THREADS`.

-

-**Using experimental GSTools-Core for even more speed**

-

-You can install the optional dependency `GSTools-Core `_,

-which is a re-implementation of the main algorithms used in GSTools. The new

-package uses the language Rust and it should be faster (in some cases by orders

-of magnitude), safer, and it will potentially completely replace the current

-standard implementation in Cython. Once the package GSTools-Core is available

-on your machine, it will be used by default. In case you want to switch back to

-the Cython implementation, you can set :code:`gstools.config.USE_RUST=False` in

-your code. This also works at runtime. You can install the optional dependency

-e.g. by

-

-.. code-block:: none

-

- pip install gstools[rust]

-

-or by manually installing the package

-

-.. code-block:: none

-

- pip install gstools-core

-

-GSTools-Core will automatically use all your cores in parallel, without having

-to use OpenMP or a local C compiler.

-In case you want to restrict the number of threads used, you can use the

-global variable `config.NUM_THREADS` to the desired number.

-

-

-Citation

-========

-

-If you are using GSTools in your publication please cite our paper:

-

- Müller, S., Schüler, L., Zech, A., and Heße, F.: GSTools v1.3: a toolbox for geostatistical modelling in Python, Geosci. Model Dev., 15, 3161–3182, https://doi.org/10.5194/gmd-15-3161-2022, 2022.

-

-You can cite the Zenodo code publication of GSTools by:

-

- Sebastian Müller & Lennart Schüler. GeoStat-Framework/GSTools. Zenodo. https://doi.org/10.5281/zenodo.1313628

-

-If you want to cite a specific version, have a look at the `Zenodo site `__.

-

-

-Tutorials and Examples

-======================

-

-The documentation also includes some `tutorials `__,

-showing the most important use cases of GSTools, which are

-

-- `Random Field Generation `__

-- `The Covariance Model `__

-- `Variogram Estimation `__

-- `Random Vector Field Generation `__

-- `Kriging `__

-- `Conditioned random field generation `__

-- `Field transformations `__

-- `Geographic Coordinates `__

-- `Spatio-Temporal Modelling `__

-- `Normalizing Data `__

-- `Miscellaneous examples `__

-

-

-Spatial Random Field Generation

-===============================

-

-The core of this library is the generation of spatial random fields.

-These fields are generated using the randomisation method, described by

-`Heße et al. 2014 `_.

-

-

-Examples

---------

-

-Gaussian Covariance Model

-^^^^^^^^^^^^^^^^^^^^^^^^^

-

-This is an example of how to generate a 2 dimensional spatial random field (:any:`SRF`)

-with a :any:`Gaussian` covariance model.

-

-.. code-block:: python

-

- import gstools as gs

- # structured field with a size 100x100 and a grid-size of 1x1

- x = y = range(100)

- model = gs.Gaussian(dim=2, var=1, len_scale=10)

- srf = gs.SRF(model)

- srf((x, y), mesh_type='structured')

- srf.plot()

-

-.. image:: https://raw.githubusercontent.com/GeoStat-Framework/GSTools/main/docs/source/pics/gau_field.png

- :width: 400px

- :align: center

-

-GSTools also provides support for `geographic coordinates `_.

-This works perfectly well with `cartopy `_.

-

-.. code-block:: python

-

- import matplotlib.pyplot as plt

- import cartopy.crs as ccrs

- import gstools as gs

- # define a structured field by latitude and longitude

- lat = lon = range(-80, 81)

- model = gs.Gaussian(latlon=True, len_scale=777, geo_scale=gs.KM_SCALE)

- srf = gs.SRF(model, seed=12345)

- field = srf.structured((lat, lon))

- # Orthographic plotting with cartopy

- ax = plt.subplot(projection=ccrs.Orthographic(-45, 45))

- cont = ax.contourf(lon, lat, field, transform=ccrs.PlateCarree())

- ax.coastlines()

- ax.set_global()

- plt.colorbar(cont)

-

-.. image:: https://github.com/GeoStat-Framework/GeoStat-Framework.github.io/raw/master/img/GS_globe.png

- :width: 400px

- :align: center

-

-A similar example but for a three dimensional field is exported to a

-`VTK `__ file, which can be visualized with

-`ParaView `_ or

-`PyVista `__ in Python:

-

-.. code-block:: python

-

- import gstools as gs

- # structured field with a size 100x100x100 and a grid-size of 1x1x1

- x = y = z = range(100)

- model = gs.Gaussian(dim=3, len_scale=[16, 8, 4], angles=(0.8, 0.4, 0.2))

- srf = gs.SRF(model)

- srf((x, y, z), mesh_type='structured')

- srf.vtk_export('3d_field') # Save to a VTK file for ParaView

-

- mesh = srf.to_pyvista() # Create a PyVista mesh for plotting in Python

- mesh.contour(isosurfaces=8).plot()

-

-.. image:: https://github.com/GeoStat-Framework/GeoStat-Framework.github.io/raw/master/img/GS_pyvista.png

- :width: 400px

- :align: center

-

-

-Estimating and fitting variograms

-=================================

-

-The spatial structure of a field can be analyzed with the variogram, which contains the same information as the covariance function.

-

-All covariance models can be used to fit given variogram data by a simple interface.

-

-

-Examples

---------

-

-This is an example of how to estimate the variogram of a 2 dimensional unstructured field and estimate the parameters of the covariance

-model again.

-

-.. code-block:: python

-

- import numpy as np

- import gstools as gs

- # generate a synthetic field with an exponential model

- x = np.random.RandomState(19970221).rand(1000) * 100.

- y = np.random.RandomState(20011012).rand(1000) * 100.

- model = gs.Exponential(dim=2, var=2, len_scale=8)

- srf = gs.SRF(model, mean=0, seed=19970221)

- field = srf((x, y))

- # estimate the variogram of the field

- bin_center, gamma = gs.vario_estimate((x, y), field)

- # fit the variogram with a stable model. (no nugget fitted)

- fit_model = gs.Stable(dim=2)

- fit_model.fit_variogram(bin_center, gamma, nugget=False)

- # output

- ax = fit_model.plot(x_max=max(bin_center))

- ax.scatter(bin_center, gamma)

- print(fit_model)

-

-Which gives:

-

-.. code-block:: python

-

- Stable(dim=2, var=1.85, len_scale=7.42, nugget=0.0, anis=[1.0], angles=[0.0], alpha=1.09)

-

-.. image:: https://raw.githubusercontent.com/GeoStat-Framework/GeoStat-Framework.github.io/master/img/GS_vario_est.png

- :width: 400px

- :align: center

-

-

-Kriging and Conditioned Random Fields

-=====================================

-

-An important part of geostatistics is Kriging and conditioning spatial random

-fields to measurements. With conditioned random fields, an ensemble of field realizations

-with their variability depending on the proximity of the measurements can be generated.

-

-

-Example

--------

-

-For better visualization, we will condition a 1d field to a few "measurements",

-generate 100 realizations and plot them:

-

-.. code-block:: python

-

- import numpy as np

- import matplotlib.pyplot as plt

- import gstools as gs

-

- # conditions

- cond_pos = [0.3, 1.9, 1.1, 3.3, 4.7]

- cond_val = [0.47, 0.56, 0.74, 1.47, 1.74]

-

- # conditioned spatial random field class

- model = gs.Gaussian(dim=1, var=0.5, len_scale=2)

- krige = gs.krige.Ordinary(model, cond_pos, cond_val)

- cond_srf = gs.CondSRF(krige)

- # same output positions for all ensemble members

- grid_pos = np.linspace(0.0, 15.0, 151)

- cond_srf.set_pos(grid_pos)

-

- # seeded ensemble generation

- seed = gs.random.MasterRNG(20170519)

- for i in range(100):

-     field = cond_srf(seed=seed(), store=f"field_{i}")

-     plt.plot(grid_pos, field, color="k", alpha=0.1)

- plt.scatter(cond_pos, cond_val, color="k")

- plt.show()

-

-.. image:: https://raw.githubusercontent.com/GeoStat-Framework/GSTools/main/docs/source/pics/cond_ens.png

- :width: 600px

- :align: center

-

-

-User defined covariance models

-==============================

-

-One of the core features of GSTools is the powerful

-:any:`CovModel`

-class, which allows users to easily define covariance models.

-

-

-Example

--------

-

-Here we re-implement the Gaussian covariance model by defining just the

-`correlation `_ function,

-which takes a non-dimensional distance :class:`h = r/l`

-

-.. code-block:: python

-

- import numpy as np

- import gstools as gs

- # use CovModel as the base-class

- class Gau(gs.CovModel):

-     def cor(self, h):

-         return np.exp(-h**2)

-

-And that's it! With :class:`Gau` you now have a fully working covariance model,

-which you could use for field generation or variogram fitting as shown above.

-

-

-Incompressible Vector Field Generation

-======================================

-

-Using the original `Kraichnan method `_, incompressible random

-spatial vector fields can be generated.

-

-

-Example

--------

-

-.. code-block:: python

-

- import numpy as np

- import gstools as gs

- x = np.arange(100)

- y = np.arange(100)

- model = gs.Gaussian(dim=2, var=1, len_scale=10)

- srf = gs.SRF(model, generator='VectorField', seed=19841203)

- srf((x, y), mesh_type='structured')

- srf.plot()

-

-yielding

-

-.. image:: https://raw.githubusercontent.com/GeoStat-Framework/GSTools/main/docs/source/pics/vec_srf_tut_gau.png

- :width: 600px

- :align: center

-

-

-VTK/PyVista Export

-==================

-

-After you have created a field, you may want to save it to file, so we provide

-a handy `VTK `_ export routine using the :class:`.vtk_export()` method, or you could

-create a VTK/PyVista dataset for use in Python with the :class:`.to_pyvista()` method:

-

-.. code-block:: python

-

- import gstools as gs

- x = y = range(100)

- model = gs.Gaussian(dim=2, var=1, len_scale=10)

- srf = gs.SRF(model)

- srf((x, y), mesh_type='structured')

- srf.vtk_export("field") # Saves to a VTK file

- mesh = srf.to_pyvista() # Create a VTK/PyVista dataset in memory

- mesh.plot()

-

-Which gives a RectilinearGrid VTK file :file:`field.vtr` or creates a PyVista mesh

-in memory for immediate 3D plotting in Python.

-

-.. image:: https://raw.githubusercontent.com/GeoStat-Framework/GSTools/main/docs/source/pics/pyvista_export.png

- :width: 600px

- :align: center

-

-

-Requirements

-============

-

-- `NumPy >= 1.20.0 `_

-- `SciPy >= 1.1.0 `_

-- `hankel >= 1.0.0 `_

-- `emcee >= 3.0.0 `_

-- `pyevtk >= 1.1.1 `_

-- `meshio >= 5.1.0 `_

-

-

-Optional

---------

-

-- `GSTools-Core >= 0.2.0 `_

-- `matplotlib `_

-- `pyvista `_

-

-

-Contact

--------

-

-You can contact us via `info@geostat-framework.org `_.

-

-

-License

-=======

-

-`LGPLv3 `_

-

-

-.. |GH-Discussions| image:: https://img.shields.io/badge/GitHub-Discussions-f6f8fa?logo=github&style=flat

- :alt: GH-Discussions

- :target: https://github.com/GeoStat-Framework/GSTools/discussions